AI Technology Behind 41% of Cyber Incidents in Schools

In countries like the UK and the US, 41% of schools reported AI-related cyber incidents. These ranged from phishing attacks to AI-generated content, according to the latest report by an identity and access management firm.

That 41% breaks down into 11% of institutions that faced serious disruption and 30% that said they quickly brought the issue under control. The research, conducted by TrendCandy for Keeper Security, surveyed 1,460 administrators.

According to the survey, 82% of schools feel “prepared” to deal with AI cyber incidents, but only a few are highly confident in their systems. This suggests that schools are aware of the risks, yet a gap remains between that awareness and their actual AI safety preparedness.

Anne Cutler of Keeper Security says that education leaders are concerned about the AI threat, but only a few are confident they can identify it. She says that the problem is not awareness but understanding when AI turns from helpful to harmful. The same tools that help students can also be misused to create phishing emails and deepfake videos, she says.

AI Cyber Incidents Overlooked?

The finding that 41% of schools have experienced an AI cyber incident is striking but not unexpected: just as AI is advancing rapidly, it is also expanding through the education system without proper controls, says David Bader, director of the Institute for Data Science.

He stated, “This data suggests that this is a serious concern, meaning that educational institutions adopting AI without a safety system may face similar challenges. Schools are already vulnerable to cyberattacks due to limited staff and budgets. And now, uncontrolled AI tools are increasing their risk.”

The real number of AI incidents may well be higher, since the 41% figure covers only the cases that were reported.

James McQuiggan (CISO Advisor, KnowBe4) agrees: “Schools are using AI tools without any cybersecurity measures, so these numbers may be conservative.” He says that schools lack resources and governance, which increases the risk of data misuse and exposure.

According to Paul Bischoff (Consumer Privacy Advocate, Comparitech), AI is making phishing emails more realistic, which makes attacks easier to launch and more likely to succeed. According to the 2025 Verizon Data Breach Investigations Report, 77% of breaches in the education sector are caused by phishing, making it the most common form of cyberattack.

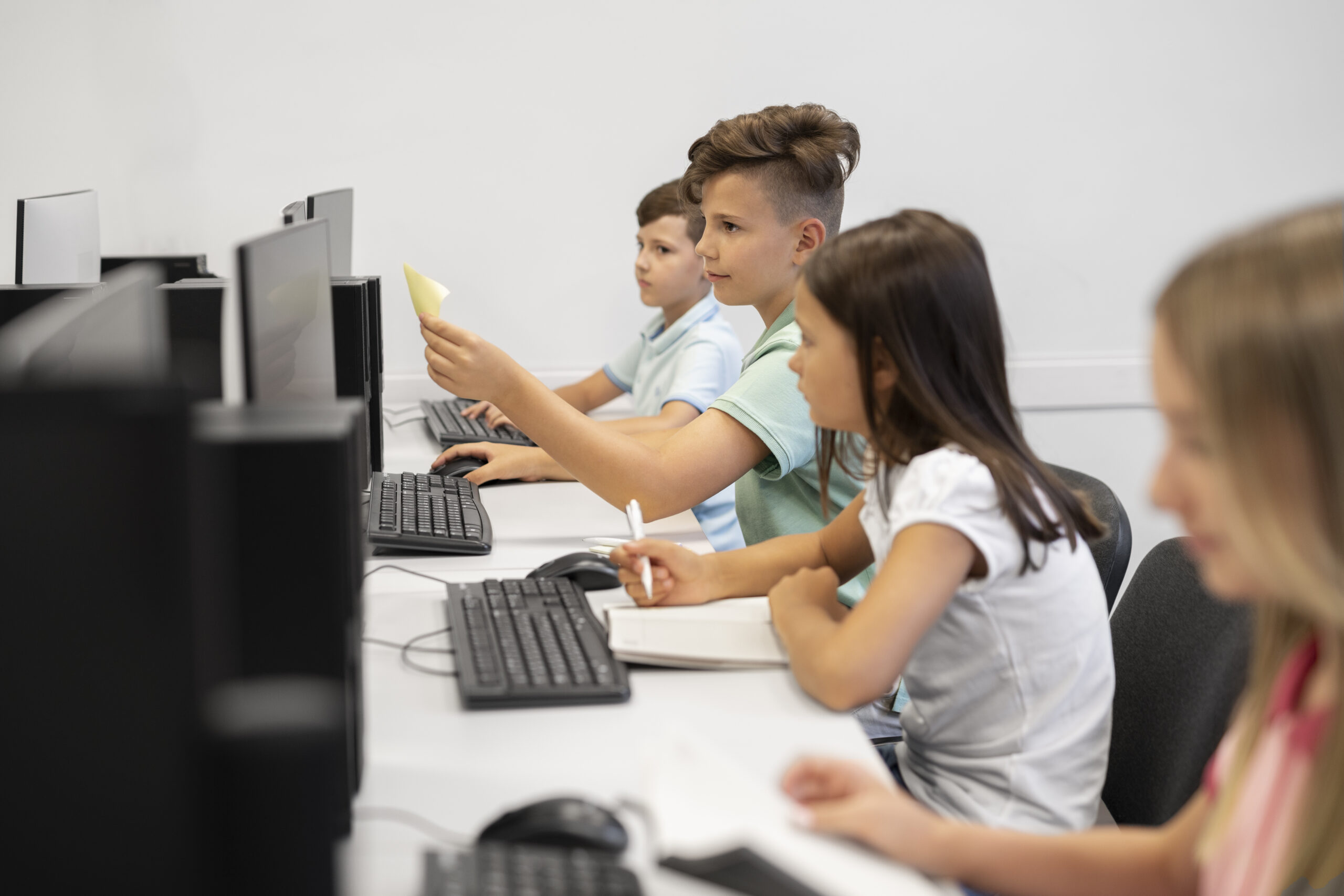

Growing AI Usage in the Education Sector

A recent study found that AI has become a regular part of classroom and faculty work. In 86% of institutions, students are allowed to use AI tools, while in 2% the tools are not yet permitted or are banned outright. Use among faculty is even higher: 91% of teachers actively use AI tools.

According to the report, students use AI for activities (27%), innovation (60%), and language assistance (49%).

Creative projects (45%) and revision support (40%) are also common, while tasks like coding (30%) and research (62%) are slightly more restricted.

David Bader says, “Students and faculty are increasingly using AI tools, so it’s impossible for schools to keep them out. Students are also accessing AI tools from their personal phones, so whatever the school policies say, they are being ignored.”

He said, “The problem now is not whether AI should be allowed or not, but how to integrate AI responsibly. Schools will have to take the lead and use it through education, ethics, and guardrails.” He warned that banning AI will have the opposite effect. “Banning AI will achieve nothing; students will use it secretly, robbing them of the opportunity to develop digital literacy skills.”

Sam Whitaker, VP of StudyFetch, explained, “There’s a difference between using AI and responsible AI. If students use ChatGPT or any random AI tools without limits, it could have long-term effects on their creativity and critical thinking.”

He further said, “Schools have a responsibility to provide students with safe and ethical AI tools for learning—not shortcuts to cheating.”

AI Integration Growing Faster Than School Guidelines

While schools and universities are adopting AI, governance remains uneven, according to the study. Many institutions are still developing their policies. About half have an AI policy (51%) or informal guidance (53%), and fewer than 60% have implemented AI detection tools and student training programs.

Even more concerning is that while more than 40% of schools are prepared for AI incidents, only 37% have an incident response plan and 34% have a budget for AI detection. This points to a significant preparedness gap.

Anne Cutler of Keeper Security said that if schools rely on informal guidelines, students and teachers will be confused about how to use AI safely. “The lack of policy means they’re still in catch-up mode, not that schools are afraid of AI,” she said. Schools are adopting AI, but their governance isn’t keeping pace.

Policies provide a framework that balances innovation and accountability—clearly outlining how AI will support learning, protecting sensitive data like student records or intellectual property, and maintaining transparency about when and where AI is and should be used.

University of Michigan researcher Elyse Thulin says AI policies can’t be a “one-size-fits-all” solution. Every institution will need to adapt to its needs and resources. Every technology comes with risks and the potential for misuse, but that doesn’t mean it’s harmful—we just need to develop smart strategies to prevent its misuse.

AI is growing very fast, so continuous research and monitoring are essential. The more we understand the patterns and impacts of AI, the more we can create safe and ethical learning environments.

